AERIS: Argonne's Earth Systems Model

Sam Foreman 2025-10-08

- 🌎 AERIS

- High-Level Overview of AERIS

- Contributions

- Model Overview

- Windowed Self-Attention

- Model Architecture: Details

- Issues with the Deterministic Approach

- Transitioning to a Probabilistic Model

- Sequence-Window-Pipeline Parallelism (SWiPe)

- Aurora

- AERIS: Scaling Results

- Hurricane Laura

- S2S: Subseasonal-to-Seasonal Forecasts

- Seasonal Forecast Stability

- Next Steps

- References

- Extras

- Acknowledgements

🌎 AERIS

Sequence-Window-Pipeline Parallelism (SWiPe)

- SWiPe is a novel parallelism strategy for Swin-based Transformers

- Hybrid 3D parallelism strategy, combining:

  - Sequence parallelism (SP)

  - Window parallelism (WP)

  - Pipeline parallelism (PP)

Figure 6

Figure 7: SWiPe Communication Patterns
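The sketch below is a rough illustration of how the three axes compose. It maps a flat rank index to (pipeline stage, window group, sequence shard) coordinates. The layout (SP varies fastest, then WP, then PP), the helper names `swipe_coords` and `sp_group`, and the example group sizes are hypothetical assumptions for illustration only. They are not taken from the AERIS/SWiPe implementation.

```python
from typing import NamedTuple


class SwipeCoords(NamedTuple):
    pp: int  # pipeline stage
    wp: int  # window-parallel group
    sp: int  # sequence-parallel shard within the group


def swipe_coords(rank: int, sp_size: int, wp_size: int, pp_size: int) -> SwipeCoords:
    """Hypothetical rank layout: SP varies fastest, then WP, then PP."""
    world = sp_size * wp_size * pp_size  # total ranks = SP x WP x PP
    if not 0 <= rank < world:
        raise ValueError(f"rank {rank} is outside a world of size {world}")
    sp = rank % sp_size
    wp = (rank // sp_size) % wp_size
    pp = rank // (sp_size * wp_size)
    return SwipeCoords(pp=pp, wp=wp, sp=sp)


def sp_group(pp: int, wp: int, sp_size: int, wp_size: int) -> list[int]:
    """Ranks sharing (pp, wp), i.e. one sequence-parallel group under this layout."""
    base = pp * sp_size * wp_size + wp * sp_size
    return list(range(base, base + sp_size))


if __name__ == "__main__":
    # Example: 4-way SP x 3-way WP x 2 pipeline stages = 24 ranks.
    for r in (0, 5, 23):
        print(r, swipe_coords(r, sp_size=4, wp_size=3, pp_size=2))
    print(sp_group(pp=1, wp=2, sp_size=4, wp_size=3))  # [20, 21, 22, 23]
```

Placing the most communication-heavy axis innermost is one common choice. Under that layout its ranks are adjacent, often on the same node. Whether SWiPe orders its axes this way is not stated here.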

Aurora

Table 3: Aurora¹ Specs

| Property | Value |
|---|---|
| Racks | 166 |
| Nodes | 10,624 |
| XPUs² | 127,488 |
| CPUs | 21,248 |
| NICs | 84,992 |
| HBM | 8 PB |
| DDR5 | 10 PB |

Figure 8: Aurora: Fact Sheet.
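The system-wide totals in Table 3 are consistent with a fixed per-node configuration. Dividing each total by the node count recovers that configuration exactly, with 12 XPUs per node (6 GPUs × 2 tiles). The dictionary and helper names below are illustrative only.

```python
# System-wide totals from Table 3 (Aurora specs).
AURORA_TOTALS = {"Nodes": 10_624, "XPUs": 127_488, "CPUs": 21_248, "NICs": 84_992}


def per_node_counts(totals: dict[str, int]) -> dict[str, int]:
    """Divide each system-wide count by the node count, requiring an exact split."""
    nodes = totals["Nodes"]
    counts = {}
    for name, total in totals.items():
        if name == "Nodes":
            continue
        per_node, remainder = divmod(total, nodes)
        if remainder:
            raise ValueError(f"{name} total {total} is not divisible by {nodes} nodes")
        counts[name] = per_node
    return counts


print(per_node_counts(AURORA_TOTALS))  # {'XPUs': 12, 'CPUs': 2, 'NICs': 8}
```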

AERIS: Scaling Results

Figure 9: AERIS: Scaling Results

- 10 EFLOPS (sustained) @ 120,960 GPUs

- See Hatanpää et al. (2025) for additional details (arXiv:2509.13523)

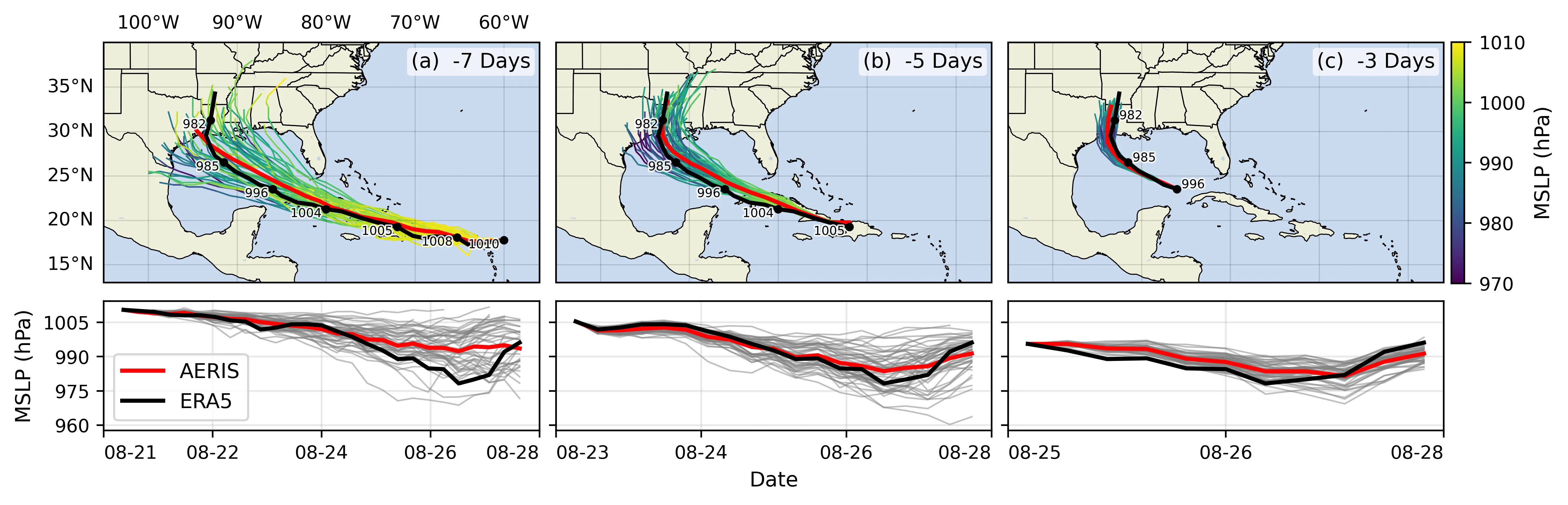
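The headline number can be put in per-device terms with a quick back-of-the-envelope calculation. This assumes each "GPU" here is one XPU (tile), at 12 per Aurora node as in Table 3. That reading is consistent with 120,960 dividing evenly by 12, but it is an assumption. The result is an average sustained rate per device, not a peak.

```python
SUSTAINED_FLOPS = 10e18  # 10 EFLOPS sustained, from the slide
N_GPUS = 120_960         # devices used in the run, from the slide
XPUS_PER_NODE = 12       # assumption: Aurora node = 6 GPUs x 2 tiles (Table 3)
AURORA_NODES = 10_624    # from Table 3

per_gpu_tflops = SUSTAINED_FLOPS / N_GPUS / 1e12
nodes_used, leftover = divmod(N_GPUS, XPUS_PER_NODE)
fraction_of_machine = nodes_used / AURORA_NODES

print(f"{per_gpu_tflops:.1f} TFLOPS per device")                  # 82.7 TFLOPS per device
print(f"{nodes_used} nodes ({fraction_of_machine:.1%} of Aurora)")  # 10080 nodes (94.9% of Aurora)
```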

Hurricane Laura

Figure 10: Hurricane Laura tracks (top) and intensity (bottom) for forecasts initialized (a) 7, (b) 5, and (c) 3 days prior to 2020-08-28T00Z.

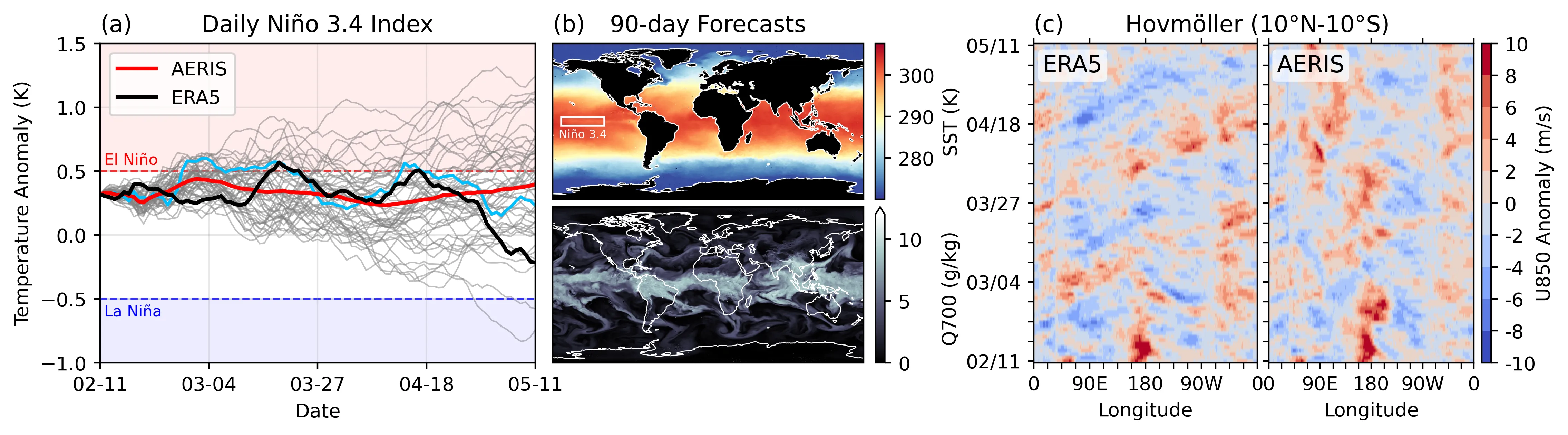

S2S: Subseasonal-to-Seasonal Forecasts

> [!IMPORTANT]
>
> 🌡️ S2S Forecasts
>
> We demonstrate, for the first time, the ability of a generative, high-resolution (native ERA5) diffusion model to produce skillful forecasts on S2S timescales with realistic evolution of the Earth system (atmosphere + ocean).

- To assess trends beyond the range of our medium-range weather forecasts (i.e., beyond 14 days) and to evaluate the stability of our model, we made 3,000 forecasts (60 initial conditions, each with 50 ensemble members) out to 90 days.

- AERIS was found to be stable during these 90-day forecasts, with:

  - Realistic atmospheric states

  - Correct power spectra, even at the smallest scales
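A "correct power spectrum" can be checked by comparing a forecast field's spectrum against ERA5 at each wavenumber. The smallest scales are the high-wavenumber tail, where over-smoothed forecasts lose power. The sketch below uses a zonal FFT along longitude, averaged over latitude rows. This is an assumed simplification: spherical-harmonic spectra are a common, more rigorous alternative, and this is not necessarily how AERIS was evaluated.

```python
import numpy as np


def zonal_power_spectrum(field: np.ndarray) -> np.ndarray:
    """Latitude-averaged power per zonal wavenumber k = 0 .. nlon // 2.

    field: array of shape (nlat, nlon) on a regular longitude grid.
    """
    nlon = field.shape[-1]
    coeffs = np.fft.rfft(field, axis=-1) / nlon  # normalized Fourier coefficients along longitude
    power = np.abs(coeffs) ** 2
    return power.mean(axis=0)  # simple (unweighted) average over latitude rows


# Example: a pure wavenumber-5 pattern should put all its power at k = 5.
nlat, nlon = 8, 64
lon_idx = np.arange(nlon)
field = np.tile(np.cos(2 * np.pi * 5 * lon_idx / nlon), (nlat, 1))
spectrum = zonal_power_spectrum(field)
print(int(np.argmax(spectrum)))  # 5
```

In practice one would plot the forecast and ERA5 spectra on log-log axes and compare the high-k tails.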

Seasonal Forecast Stability

Figure 11: S2S Stability: (a) Spring barrier El Niño with realistic ensemble spread in the ocean; (b) qualitatively sharp fields of SST and Q700 predicted 90 days in the future from the ensemble member closest to ERA5 in (a); and (c) stable Hovmöller diagrams of U850 anomalies (climatology removed; m/s), averaged between 10°S and 10°N, for a 90-day rollout.
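The Hovmöller panel in (c) reduces a 3D rollout to a time-longitude picture. The sketch below shows one way to do that reduction. It assumes gridded U850 of shape (time, lat, lon), a precomputed climatology, and cosine-latitude weighting (the caption only says "averaged"). The function name, its arguments, and the synthetic example data are hypothetical.

```python
import numpy as np


def hovmoller(field, lats, climatology, lat_min=-10.0, lat_max=10.0):
    """Return a (time, lon) array of anomalies averaged over a latitude band.

    field:       (time, lat, lon), e.g. U850 in m/s
    lats:        (lat,) latitudes in degrees
    climatology: array broadcastable to `field`, e.g. (lat, lon) or (time, lat, lon)
    """
    anomalies = np.asarray(field) - climatology  # remove the climatology
    band = (lats >= lat_min) & (lats <= lat_max)  # e.g. 10°S to 10°N
    if not band.any():
        raise ValueError("no latitudes fall inside the requested band")
    weights = np.cos(np.deg2rad(lats[band]))  # area weighting (assumed; close to 1 in the tropics)
    return np.average(anomalies[:, band, :], axis=1, weights=weights)


# Example on synthetic data: 90 daily steps on a 2° grid.
lats = np.arange(-90.0, 91.0, 2.0)
lons = np.arange(0.0, 360.0, 2.0)
clim = np.zeros((lats.size, lons.size))
field = np.ones((90, lats.size, lons.size)) + clim  # uniform +1 m/s anomaly
hov = hovmoller(field, lats, clim)
print(hov.shape)  # (90, 180)
```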

Next Steps

- Swift: a single-step consistency model that, for the first time, enables autoregressive finetuning of a probability flow model with a continuous ranked probability score (CRPS) objective.
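For reference, the CRPS of an $M$-member ensemble $\{x_i\}$ against a scalar observation $y$ is commonly estimated as $\frac{1}{M}\sum_i |x_i - y| - \frac{1}{2M^2}\sum_{i,j} |x_i - x_j|$. The "fair" variant uses $2M(M-1)$ in the second denominator instead. The sketch below implements that standard estimator to illustrate the score. It is not Swift's training objective or code.

```python
import numpy as np


def crps_ensemble(members, obs: float, fair: bool = False) -> float:
    """Ensemble CRPS estimate for a scalar observation (lower is better).

    members: 1-D array of M ensemble values
    fair:    use the M * (M - 1) normalization for the spread term (needs M >= 2)
    """
    x = np.asarray(members, dtype=float).ravel()
    m = x.size
    if m == 0 or (fair and m < 2):
        raise ValueError("not enough ensemble members")
    skill = np.mean(np.abs(x - obs))                  # E|X - y|
    spread = np.sum(np.abs(x[:, None] - x[None, :]))  # sum over all pairs |x_i - x_j|
    denom = 2 * m * (m - 1) if fair else 2 * m * m
    return float(skill - spread / denom)


print(crps_ensemble([0.0, 1.0], obs=0.0))             # 0.25
print(crps_ensemble([0.0, 1.0], obs=0.0, fair=True))  # 0.0
```

For a single member, the spread term vanishes and the score reduces to the absolute error.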

References

- What are Diffusion Models? | Lil’Log

- Step by Step Visual Introduction to Diffusion Models (blog by Kemal Erdem)

- Understanding Diffusion Models: A Unified Perspective

Hatanpää, Väinö, Eugene Ku, Jason Stock, et al. 2025. AERIS: Argonne Earth Systems Model for Reliable and Skillful Predictions. https://arxiv.org/abs/2509.13523.

Price, Ilan, Alvaro Sanchez-Gonzalez, Ferran Alet, et al. 2024. GenCast: Diffusion-Based Ensemble Forecasting for Medium-Range Weather. https://arxiv.org/abs/2312.15796.

Extras

Overview of Diffusion Models

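Goal: We would like to (efficiently) draw samples from a (potentially unknown) target distribution $q(x_0)$.

- Given $x_0 \sim q(x_0)$, we can construct a forward diffusion process by gradually adding Gaussian noise to $x_0$ over $T$ steps:

  $$x_0 \to x_1 \to \cdots \to x_T, \qquad q(x_{1:T} \mid x_0) = \prod_{t=1}^{T} q(x_t \mid x_{t-1}).$$

- Step sizes are controlled by a variance schedule $\{\beta_t \in (0, 1)\}_{t=1}^{T}$, with:

  $$q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1 - \beta_t}\, x_{t-1},\ \beta_t \mathbf{I}\right)$$

Diffusion Model: Forward Process

- Introduce: $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$.

- We can write the forward process as:

  $$q(x_t \mid x_0) = \mathcal{N}\left(x_t;\ \sqrt{\bar{\alpha}_t}\, x_0,\ (1 - \bar{\alpha}_t)\mathbf{I}\right)$$

- We see that the mean $\sqrt{\bar{\alpha}_t}\, x_0 \to 0$ and the variance $(1 - \bar{\alpha}_t)\mathbf{I} \to \mathbf{I}$ as $\bar{\alpha}_t \to 0$. For a long enough schedule, $x_T$ is therefore approximately isotropic Gaussian noise.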
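The step-by-step and closed-form views of the forward process can be checked against each other numerically. The sketch below assumes a linear schedule from $\beta_1 = 10^{-4}$ to $\beta_T = 0.02$ with $T = 1000$; these are the values used in the original DDPM paper, and the schedule is not specified here. It applies $q(x_t \mid x_{t-1})$ repeatedly and draws directly from $q(x_t \mid x_0)$. It then compares both sample means and variances with $\sqrt{\bar{\alpha}_t}\, x_0$ and $1 - \bar{\alpha}_t$.

```python
import numpy as np


def linear_beta_schedule(T: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Variance schedule beta_1..beta_T, linearly spaced (assumed schedule)."""
    return np.linspace(beta_start, beta_end, T)


def q_step(x_prev: np.ndarray, beta_t: float, rng: np.random.Generator) -> np.ndarray:
    """One forward step: x_t ~ N(sqrt(1 - beta_t) * x_{t-1}, beta_t * I)."""
    noise = rng.standard_normal(x_prev.shape)
    return np.sqrt(1.0 - beta_t) * x_prev + np.sqrt(beta_t) * noise


def q_sample(x0: np.ndarray, t: int, alpha_bar: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Closed form: x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I), with t in 1..T."""
    a = alpha_bar[t - 1]
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise


T, t = 1000, 500
betas = linear_beta_schedule(T)
alpha_bar = np.cumprod(1.0 - betas)  # alpha_bar_t = prod_{s <= t} (1 - beta_s)

rng = np.random.default_rng(0)
x0 = np.full(100_000, 2.0)  # many copies of the same scalar x0 = 2
x_iter = x0.copy()
for beta in betas[:t]:      # apply q(x_t | x_{t-1}) t times
    x_iter = q_step(x_iter, beta, rng)
x_jump = q_sample(x0, t, alpha_bar, rng)  # jump straight to step t

print("expected mean/var:", np.sqrt(alpha_bar[t - 1]) * 2.0, 1.0 - alpha_bar[t - 1])
print("step-by-step:     ", x_iter.mean(), x_iter.var())
print("closed form:      ", x_jump.mean(), x_jump.var())
print("alpha_bar_T:", alpha_bar[-1])  # close to 0, so x_T is nearly pure noise
```

The closed form is what makes training practical: a random $t$ can be sampled and $x_t$ produced in one draw, without simulating all earlier steps.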

Acknowledgements

This research used resources of the Argonne Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.

Footnotes

- ² Each node has 6 Intel Data Center GPU Max 1550 (code-named “Ponte Vecchio”) GPUs, each with 2 tiles, for 12 XPUs per node.